Deep neural networks have fuelled significant progress in generative AI, yet the architecture they run on stands in the way of optimal efficiency. Discover how IBM Research draws inspiration from the human brain to enhance digital cognitive systems.

Deep neural networks are responsible for many advancements in generative artificial intelligence (AI). Yet they typically run on an architecture that acts as a bottleneck and prevents optimal efficiency. Memory and processing sit in distinct modules, so moving data between them places a substantial burden on system resources. This leads to slower processing speeds and diminished overall efficiency.

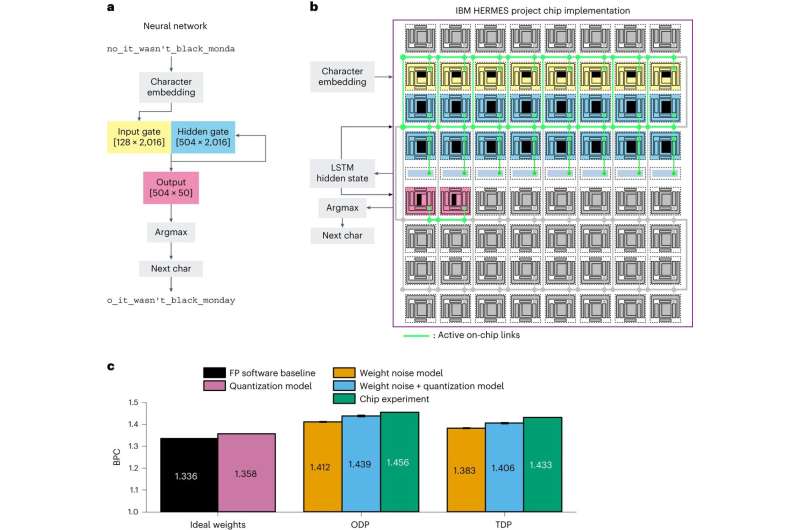

Drawing inspiration from the most efficient model available, the human brain, IBM Research has devised a way to make digital cognitive systems more efficient: a 64-core mixed-signal in-memory compute chip, based on phase-change memory, for deep neural network inference. The mixed-signal AI chip has the potential to raise efficiency while minimising battery consumption in AI workloads.

“The human brain demonstrates performance while maintaining low power consumption,” remarked Thanos Vasilopoulos, a study co-author from IBM’s research lab in Zurich, Switzerland. Mirroring the interplay of synapses within the brain, the mixed-signal chip comprises 64 analog in-memory cores, each housing an array of synaptic cell units. Converters handle the transitions between the analog and digital domains. The chip achieved an accuracy of 92.81% on the CIFAR-10 image dataset.
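
The flow described above (digital inputs converted to analog, a multiply-accumulate carried out inside the memory array, and the result converted back to digital) can be illustrated with a small simulation. This is only a sketch under stated assumptions: the 8-bit converter resolutions, the full-scale ranges, and the function names `quantize` and `analog_mvm` are hypothetical choices for illustration, not details of the IBM chip.

```python
def quantize(value, bits, full_scale):
    """Model a signed uniform converter: clip to +/-full_scale, round to the nearest level."""
    levels = 2 ** (bits - 1) - 1
    clipped = max(-full_scale, min(full_scale, value))
    return round(clipped / full_scale * levels) / levels * full_scale


def analog_mvm(weights, inputs, dac_bits=8, adc_bits=8, in_scale=1.0):
    """Matrix-vector multiply in the style of one in-memory core.

    weights[i][j] plays the role of the synaptic cell linking input row i
    to output column j. Bit widths and scales are illustrative assumptions.
    """
    # DAC step: digital inputs become quantised analog row signals.
    row_signals = [quantize(x, dac_bits, in_scale) for x in inputs]

    # Analog step: each column sums the contributions of all rows at once.
    n_cols = len(weights[0])
    column_sums = [
        sum(weights[i][j] * row_signals[i] for i in range(len(row_signals)))
        for j in range(n_cols)
    ]

    # ADC step: pick a full-scale range that covers the largest possible column sum.
    max_weight = max(abs(w) for row in weights for w in row)
    out_scale = (len(inputs) * in_scale * max_weight) or 1.0
    return [quantize(s, adc_bits, out_scale) for s in column_sums]


weights = [[0.5, -0.25],
           [0.25, 0.5]]
inputs = [1.0, -1.0]
exact = [0.25, -0.75]          # what an ideal digital multiply would give
approx = analog_mvm(weights, inputs)
print(approx)                  # close to `exact`, up to converter rounding
```

The point of the sketch is that the weights never leave the array: only the input and output vectors pass through converters, which is where the small rounding error comes from.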

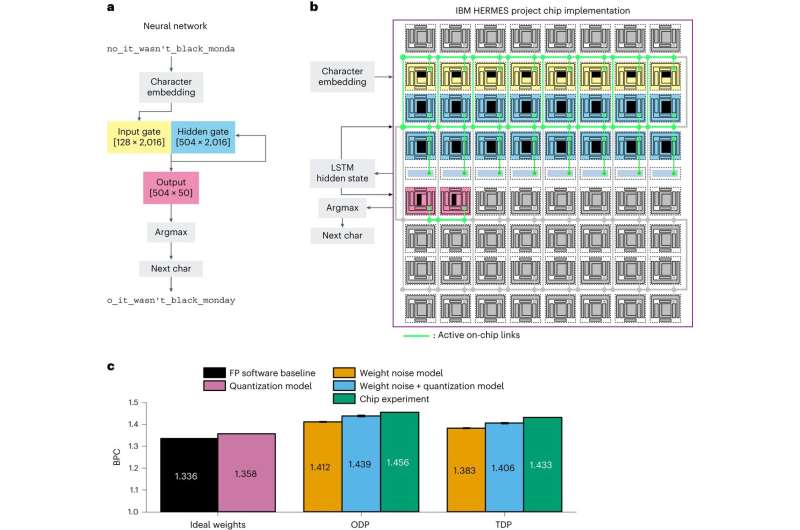

The research group demonstrated inference accuracy close to that of software-based equivalents using ResNet (residual neural network) and long short-term memory networks. ResNet is a deep learning model that enables training across numerous neural network layers without compromising performance. Combining analog in-memory computing (AIMC) with on-chip digital operations and communication is essential to achieve end-to-end improvements in both latency and energy efficiency. The team reports a multicore AIMC chip designed and fabricated in 14 nm complementary metal-oxide-semiconductor technology with integrated back-end phase-change memory.

The heightened performance opens the door to running large and complex workloads in settings with limited power or battery resources, such as cell phones, automobiles, and cameras. Cloud service providers also stand to benefit by using these chips to cut energy expenses and reduce their environmental impact.

Through this endeavour, many of the components needed to realise the full potential of Analog-AI, meaning high-performance and energy-efficient AI, have been validated in silicon. The result is an unprecedented fully integrated mixed-signal in-memory compute chip that relies on back-end integrated phase-change memory (PCM) within a 14-nm complementary metal-oxide-semiconductor (CMOS) process.

The chip comprises 64 AIMC cores, each equipped with a memory array of 256×256 unit cells. Each unit cell is built from four PCM devices, for a total of over 16 million devices. Alongside the analog memory array, each core integrates a lightweight digital processing unit that performs activation functions, accumulations, and scaling operations.
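
The "over 16 million devices" figure follows directly from the numbers quoted above. A quick check of the arithmetic, using only the core count, array size, and devices per cell given in the article (the variable names are chosen here for illustration):

```python
# Figures as stated in the article.
CORES = 64
ROWS, COLS = 256, 256
PCM_PER_CELL = 4

unit_cells_per_core = ROWS * COLS                    # 65,536 unit cells per core
total_unit_cells = CORES * unit_cells_per_core       # 4,194,304 unit cells on the chip
total_pcm_devices = total_unit_cells * PCM_PER_CELL  # 16,777,216 PCM devices

print(f"{total_pcm_devices:,}")  # prints 16,777,216
```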