
MIT scientists have created a tailored onboarding method that teaches people when they can rely on an AI model's advice.

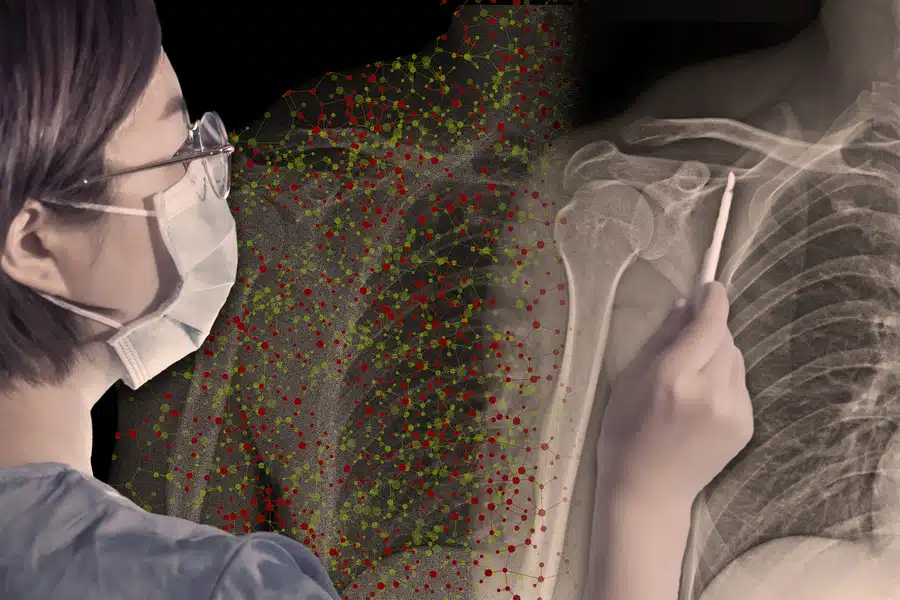

In medical imaging, artificial intelligence excels at identifying patterns in images, in many cases surpassing human performance. This raises a critical question for radiologists who use AI models to help detect pneumonia in X-ray scans: when should they follow the AI's guidance, and when should they be cautious?

Researchers at MIT and the MIT-IBM Watson AI Lab propose that a personalised onboarding procedure could resolve this dilemma for radiologists. They have devised a system that teaches users when to collaborate with an AI assistant. The training identifies situations in which a radiologist might rely on the model's guidance even though the model is wrong. The system automatically derives rules for effective collaboration with the AI and describes them in natural language.

The researchers found that this onboarding procedure led to about a 5 percent improvement in accuracy when humans and AI worked together on an image prediction task. Notably, simply telling users when to trust the AI, without any training, led to worse performance. The system is fully automated: it learns to build the onboarding process from data generated by humans and AI working together on a specific task. It can also be adapted to different tasks, so it could be applied wherever humans and AI models collaborate, such as social media content moderation, content creation, and programming.

The team observes that most tools come with tutorials, but AI models are often deployed without any comparable guidance. The researchers aim to tackle this problem from both a methodological and a behavioural angle. They expect this kind of onboarding to become an essential part of training for medical professionals, helping them use AI tools effectively. They envision a scenario in which doctors who rely on AI to make treatment decisions first undergo training similar to the approach they propose. This could require rethinking everything from continuing medical education to the way clinical trials are designed.

An evolving approach to training

Traditional onboarding methods for human-AI collaboration rely on training materials produced by human experts, which limits their scalability. Other approaches use AI explanations to convey how confident the model is in each decision, but these have often proven ineffective. Because AI capabilities keep evolving, the range of tasks in which human-AI collaboration is beneficial grows over time, and users' perceptions of AI models shift as well. A training procedure therefore needs to adapt to these changes.

To address this, the researchers' onboarding method is learned automatically from data. It starts with a dataset containing many instances of a task, such as detecting traffic lights in blurry images. First, the system collects data from human-AI interactions, in which a human works with the AI to predict whether a traffic light is present. It then maps these data points into a latent space, where similar data points lie close together. An algorithm identifies regions of this space in which the human-AI team tends to make mistakes, such as regions where the human trusted the AI's prediction even though it was wrong, as with images of highways at night.
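The region-finding step described above can be illustrated with a small sketch. It is a hedged illustration under stated assumptions and not the researchers' implementation. It assumes each interaction has already been embedded into a latent space. It uses plain k-means as a stand-in for however the system actually groups similar points. It flags a region when the human accepted a wrong AI prediction in at least half of its instances, and that 0.5 threshold is arbitrary. The names `Interaction`, `overreliance_regions` and `describe_region` are hypothetical. The template sentence only stands in for the natural-language descriptions the real system generates, and the toy data is made up.

```python
# Hypothetical sketch of the region-finding step: NOT the researchers' code.
# Assumptions: interactions are already embedded in a latent space, plain
# k-means stands in for the grouping step, and the 0.5 threshold is arbitrary.
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Interaction:
    embedding: Tuple[float, ...]  # task instance in the latent space (assumed given)
    ai_correct: bool              # was the AI's prediction right?
    human_relied: bool            # did the human accept the AI's prediction?


def kmeans(points, k, iters=20):
    """Plain k-means, initialised deterministically with the first k points."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(xs) / len(members) for xs in zip(*members)]
    return labels, centroids


def overreliance_regions(interactions: List[Interaction], k=3, threshold=0.5):
    """Return (cluster, centroid, rate, size) for clusters where the human
    accepted a wrong AI prediction in at least `threshold` of the cases."""
    labels, centroids = kmeans([i.embedding for i in interactions], k)
    flagged = []
    for c in range(k):
        members = [i for i, lab in zip(interactions, labels) if lab == c]
        if not members:
            continue
        # Count cases where the human trusted the AI and the AI was wrong.
        misplaced = sum(1 for i in members if i.human_relied and not i.ai_correct)
        rate = misplaced / len(members)
        if rate >= threshold:
            flagged.append((c, centroids[c], rate, len(members)))
    return flagged


def describe_region(region):
    """Hypothetical template text standing in for natural-language rules."""
    c, _, rate, size = region
    return (f"In region {c} ({size} examples), you accepted a wrong AI "
            f"prediction in {rate:.0%} of cases: double-check the AI here.")


# Made-up toy data: the cluster near (10, 10) plays the "nighttime highway" role.
data = [
    Interaction((0.0, 0.0), True, True),
    Interaction((10.0, 10.0), False, True),
    Interaction((0.0, 10.0), True, False),
    Interaction((0.5, 0.2), True, True),
    Interaction((10.2, 9.8), False, True),
    Interaction((0.3, 9.7), True, False),
    Interaction((9.9, 10.3), True, True),
]
flagged = overreliance_regions(data)
for region in flagged:
    print(describe_region(region))
```

K-means was chosen here only because it is short and well known. In practice the embedding would come from a learned model, and the grouping method, number of regions and threshold would all need to be tuned to the task.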

Elevated accuracy through onboarding

The researchers tested their system on two tasks: detecting traffic lights in blurry images and answering multiple-choice questions from many domains (e.g., biology, philosophy, computer science). Users first received an information card describing the AI model, how it was trained, and how it performed across categories. Users were then split into five groups. One group saw only the information card, and a second went through the researchers' onboarding. A third followed a basic onboarding procedure. A fourth received the researchers' onboarding plus recommendations on when to trust the AI, and the fifth received only the recommendations.

The researchers' onboarding alone significantly improved user accuracy, especially on the traffic light task, where accuracy rose by about 5 percent, and it did not slow users down. It was less effective for question answering. This was possibly because the model used for that task, ChatGPT, already gave an explanation with each answer indicating whether it should be trusted. By contrast, giving recommendations without onboarding backfired: users performed worse and took longer, likely because being told what to do caused confusion and resistance.
